RAID, performance tests

Revision as of 00:23, 27 December 2007

Gmirror Disk Performance

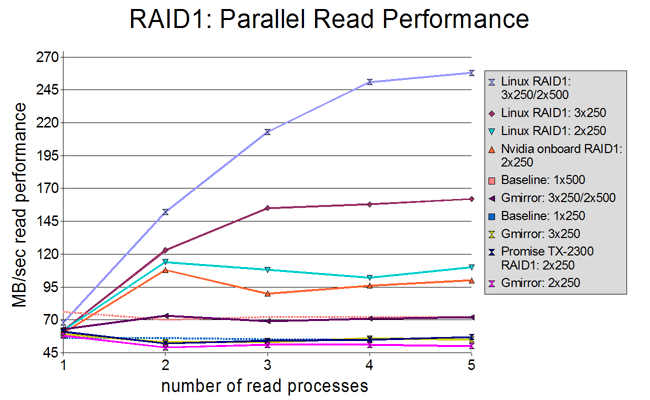

Gmirror, unfortunately, is not doing very well at this time, at least not on the amd64 platform tested. A 2-drive gmirror performed far worse than even a single baseline drive. The 3-drive and 5-drive gmirrors outperformed the baseline 250GB drive tested but were handily beaten by both the 500GB baseline drive and the Nvidia onboard RAID1 implementation.

Only results for the round-robin balance algorithm are shown here, because it was the clear performance winner across the board for gmirror implementations. The split (slice size 128K) and load algorithms were also tested, and raw data for the split algorithm is available on the image page itself. The load algorithm performed even worse than split and thus was not fully tested.

It is interesting to note that the Nvidia onboard RAID1 implementation does not accelerate single-process copies, and also suffers from a very significant variation in how it handles simultaneous processes - in the 5-process simultaneous copy, a full 38 seconds elapsed between the finish of the first process and the fifth. If you can live with the odd variation in how quickly it handles tasks that should have the same priority, however, in terms of sheer performance it is the clear winner among the RAID1 implementations tested.

The Promise TX-2300 RAID1 implementation was just plain poor: about on par with the 2-disk Gmirror implementation (which is slower than the baseline 250GB drive across the board), and well below the 3-disk Gmirror implementation.

Graid3 Disk Performance

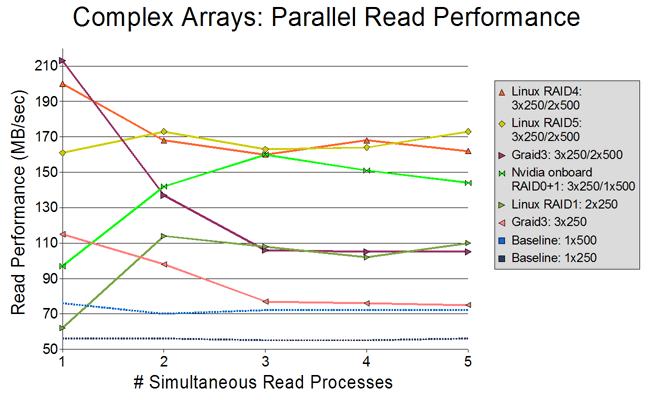

Graid3 is doing noticeably better than Gmirror. The 5-drive Graid3 implementation handily outperformed everything else tested. The 3-drive Graid3 implementation performed slightly slower than the Nvidia RAID1 in the 2-process and 3-process tests and significantly slower in the 4-process and 5-process tests, but it nearly doubled the Nvidia RAID1's single-process performance, because the Nvidia implementation does not accelerate single-process copying at all.

Only results for the -R configuration (do not use parity drive for read operations from a healthy array) are shown here, because it outperformed the -r configuration (always use the parity member during reads) slightly to significantly in all but the 5-process test, in which it performed only very slightly worse. It is possible that a more massively parallel test (or a test of a much less contiguous filesystem) would show some advantage to -r, but for these tests, no advantage is apparent. Raw data is available on the image page itself.

Equipment

- FreeBSD 6.2-RELEASE (amd64)

- Athlon X2 5000+

- 2GB DDR2 SDRAM

- Nvidia nForce MCP51 SATA 300 onboard RAID controller

- Promise TX2300 SATA 300 RAID controller

- 3x Western Digital 250GB drives (WDC WD2500JS-22NCB1 10.02E02 SATA-300)

- 2x Western Digital 500GB drives (WDC WD5000AAKS-00YGA0 12.01C02 SATA-300)

Methodology

The read-ahead setting vfs.read_max was raised from its default value of 8 to 128 for all tests performed, using sysctl -w vfs.read_max=128. Initial testing showed dramatic performance increases with larger values of vfs.read_max for all tested configurations, including the baseline single drives. The value of 128 was arrived at by doubling vfs.read_max until no further significant performance increase was seen (at vfs.read_max=256) and then backing down to the previous value.
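The doubling procedure described above can be sketched roughly as follows. This is only an illustration in Python, not the script used for these tests. `measure` is a hypothetical callback that would set vfs.read_max to the given value, rerun the read test, and return the speed in MB/s. The 5% `min_gain` threshold and the `limit` cap are assumptions standing in for "no further significant performance increase".

```python
from typing import Callable


def tune_by_doubling(measure: Callable[[int], float],
                     start: int = 8,
                     min_gain: float = 0.05,
                     limit: int = 4096) -> int:
    """Double a setting until throughput stops improving noticeably.

    measure  -- hypothetical callback: apply the setting, run the
                benchmark, return throughput in MB/s
    start    -- first value to try (8 is the vfs.read_max default
                mentioned in the text)
    min_gain -- assumed cutoff: a step must improve throughput by at
                least this fraction to count as significant
    limit    -- assumed safety cap so the loop always terminates

    Returns the last value that still gave a significant improvement.
    """
    best = start
    best_speed = measure(best)
    candidate = best * 2
    while candidate <= limit:
        speed = measure(candidate)
        if speed < best_speed * (1 + min_gain):
            # No significant gain at this value: back down to the
            # previous one, as described in the methodology.
            break
        best, best_speed = candidate, speed
        candidate *= 2
    return best
```

With a `measure` function whose results flatten out at 256, this returns 128, which matches the way the value used in these tests was chosen.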

For the actual testing, five 3200MB files, random1.bin through random5.bin, were created on each tested device or array using dd if=/dev/random bs=16m count=200. These files were then copied with cp from the device or array to /dev/null. Elapsed times were generated by echoing a timestamp immediately before the test began and again as each individual process finished, then subtracting the beginning timestamp from the timestamp of the last process to complete. Speeds shown are simply the amount of data in MB copied to /dev/null (3200, 6400, 9600, 12800, or 16000) divided by the total elapsed time.
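For anyone wanting to repeat the measurement, the timing and the speed arithmetic can be sketched along these lines. This is a rough Python approximation, not the original procedure, which echoed timestamps from the shell. The function names are made up for this example, and the paths passed in are assumed to be the random1.bin through random5.bin files already created with dd.

```python
import os
import subprocess
import threading
import time
from typing import List, Sequence

FILE_SIZE_MB = 3200  # 200 blocks of 16 MB, per the dd command in the text


def timed_parallel_copy(paths: Sequence[str]) -> List[float]:
    """Copy each file to /dev/null with its own cp process.

    Returns, for each file, the seconds elapsed between the common
    start and the moment that file's copy finished.
    """
    finish = [0.0] * len(paths)
    start = time.monotonic()

    def worker(index: int, path: str) -> None:
        subprocess.run(["cp", path, os.devnull], check=True)
        finish[index] = time.monotonic() - start

    threads = [threading.Thread(target=worker, args=(i, p))
               for i, p in enumerate(paths)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return finish


def throughput_mb_s(n_files: int, elapsed_s: float,
                    file_size_mb: int = FILE_SIZE_MB) -> float:
    """Total MB copied divided by the time the last copy took to finish."""
    if elapsed_s <= 0:
        raise ValueError("elapsed time must be positive")
    return n_files * file_size_mb / elapsed_s


def finish_spread(finish_times: Sequence[float]) -> float:
    """Seconds between the first and the last process finishing."""
    return max(finish_times) - min(finish_times)
```

For a 5-process run, `throughput_mb_s(5, max(times))` gives the figure plotted on the graphs, and `finish_spread(times)` gives the gap between the first and last process to finish.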

Notes

The methodology used produces a very highly contiguous filesystem, which may skew results significantly higher than in some real-world settings, particularly in the single-process test. Presumably the multiple-process copy tests, particularly toward the high end of the scale, would be much less affected by fragmentation in real-world filesystems, since by their nature they require a significant number of drive seeks between blocks of the individual files being copied throughout the test.

In the 5-drive Graid3 array tested, the (significantly faster) 500GB drives were positioned as the last two elements of the array. This matters because it means the parity drive was noticeably faster than 3 of the 4 data drives in this configuration; some other testing on equipment not listed here leads me to believe that this had a favorable impact when using the -r configuration. Even so, the improvement was not large enough to make the -r results worth including on the graph.

Write performance was also tested on each of the devices and arrays listed and will be included in graphs at a later date (for now, raw data is available on the discussion page).

Googling "gmirror performance" and "gmirror slow" did not get me much of a return; just one other individual wondering why his gmirror was so abominably slow - so at some point I will endeavor to reformat the test system with 6.2-RELEASE (i386) and see if the gmirror results improve. It strikes me as very odd that graid3 with only 3 drives (therefore only 2 data drives) outperforms even a five-drive gmirror implementation.