RAID, performance tests

From FreeBSDwiki


Revision as of 22:12, 26 December 2007

[Charts: gmirror performance and graid3 performance; raw data and test equipment information below]

Equipment:

Athlon X2 5000+

3x Western Digital 250GB drives (WDC WD2500JS-22NCB1 10.02E02 SATA-300)

2x Western Digital 500GB drives (WDC WD5000AAKS-00YGA0 12.01C02)

Nvidia nForce onboard RAID controller, Promise TX2300 RAID controller

Athlon 64 3500+

5x Seagate 750GB drives (Seagate ST3750640NS 3.AEE SATA-150)

Nvidia nForce onboard RAID controller

Procedure:

read performance measured with /usr/bin/time -h timing simultaneous cp of 3.1GB files to /dev/null

files generated with dd if=/dev/random bs=16M count=200 (16M x 200 = 3200M, about 3.1G per file)

simultaneous cp processes use physically separate files

write performance tested with dd if=/dev/zero bs=16M count=200

sysctl -w vfs.read_max=128 unless otherwise stated
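The read procedure above can be scripted. This is a minimal sketch for POSIX sh, not the script actually used for these numbers. The function names (make_files, parallel_read, file_bytes), the SRC/BS/COUNT variables and the test1.bin..testN.bin file names are hypothetical. It assumes each reported figure is total bytes read divided by wall-clock time, with MB meaning 2^20 bytes as in dd's 16M. It times runs with date +%s (one-second resolution) instead of /usr/bin/time -h, so only multi-second runs like the 3.1GB files give meaningful numbers. vfs.read_max still has to be set separately with sysctl.

```shell
#!/bin/sh
# Sketch of the read test: N simultaneous cp processes reading separate files
# to /dev/null. Assumption: the reported rate is total bytes / wall-clock time.
# vfs.read_max is not touched here; set it beforehand with sysctl.

SRC=${SRC:-/dev/random}   # data source for the test files (the page used /dev/random)
BS=${BS:-16M}             # dd block size from the page
COUNT=${COUNT:-200}       # dd block count from the page: 16M x 200 = 3200 MiB per file

# make_files DIR N: write N physically separate files test1.bin..testN.bin into DIR
make_files() {
    dir=$1
    n=$2
    i=1
    while [ "$i" -le "$n" ]; do
        dd if="$SRC" of="$dir/test$i.bin" bs="$BS" count="$COUNT" 2>/dev/null
        i=$((i + 1))
    done
}

# file_bytes FILE: size in bytes from ls -ln, so the file itself is not read
file_bytes() {
    ls -ln "$1" | awk '{ print $5 }'
}

# parallel_read DIR N: cp test1..testN to /dev/null concurrently, print aggregate MiB/s
parallel_read() {
    dir=$1
    n=$2
    total=0
    i=1
    start=$(date +%s)
    while [ "$i" -le "$n" ]; do
        cp "$dir/test$i.bin" /dev/null &
        total=$((total + $(file_bytes "$dir/test$i.bin")))
        i=$((i + 1))
    done
    wait                                  # all cp processes have finished
    elapsed=$(( $(date +%s) - start ))    # whole seconds only
    if [ "$elapsed" -eq 0 ]; then
        echo "n=$n: $total bytes in under 1 s, too fast to measure"
    else
        awk -v b="$total" -v s="$elapsed" -v n="$n" \
            'BEGIN { printf "n=%d: %.1f MiB/s\n", n, b / 1048576 / s }'
    fi
}
```

For example, `make_files /mnt/gr0 2 && parallel_read /mnt/gr0 2` would run the 2-process test, with /mnt/gr0 standing in for wherever the array under test is mounted. Reading the sizes with ls -ln instead of reading the files keeps the measurement step from pulling the data into the cache.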

Notes:

The system default is vfs.read_max=8.

bonnie++ was flirted with, but I couldn't figure out how to make it read chunks of data big enough to hit the disk instead of the cache even once!
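One likely cause of the bonnie++ cache problem is a test file that fits in RAM. bonnie++ takes the file size with -s and the RAM size with -r, both in MiB, and expects the file to be at least twice the RAM size. This sketch uses a hypothetical helper name, bonnie_cmd, and /mnt/test is a placeholder directory on the array under test. The helper only prints the command. It was not used for any figures on this page.

```shell
#!/bin/sh
# bonnie_cmd DIR RAM_MIB: print a bonnie++ command whose test file (-s, MiB)
# is twice the RAM size passed with -r, so the data cannot all fit in the cache.
bonnie_cmd() {
    dir=$1
    ram_mib=$2
    echo "bonnie++ -d $dir -s $((ram_mib * 2)) -r $ram_mib"
}

# On FreeBSD, hw.physmem reports RAM in bytes (not run here):
#   ram_mib=$(( $(sysctl -n hw.physmem) / 1048576 ))
#   bonnie_cmd /mnt/test "$ram_mib"
# bonnie++ also expects -u USER when it is started as root.
```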

Ancillary data:

write performance (1 process)

5 250GB/500GB, graid3 : 153 MB/s
5 250GB/500GB, graid3 -r : 142 MB/s
1 500GB drive : 72 MB/s
1 WD Raptor 74GB drive (Cygwin) : 60 MB/s
1 250GB drive : 58 MB/s
5 250GB/500GB, gmirror round-robin : 49 MB/s
5 250GB/500GB, gmirror split 128K : 49 MB/s
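This page does not record the commands behind the configuration names in these lists. The names are "gmirror round-robin", "gmirror split 128K", "graid3" and "graid3 -r". The sketch below is a dry run. It assumes the arrays were built with the stock gmirror(8) and graid3(8) label verbs, where -b picks the gmirror balance algorithm, -s is the split slice size in bytes, and graid3 -r also reads from the parity component in round-robin fashion. The function names, gm0/gr0, and the ad* provider names are placeholders. graid3 only accepts 3, 5, 9, 17, ... (2^n + 1) components, which matches the 3- and 5-disk arrays tested.

```shell
#!/bin/sh
# Dry run: print (do not execute) a geom label command for one of the
# configuration names used in the results. gm0/gr0 and the providers passed
# in are placeholders; the page does not record the real commands.

# is_pow2_plus1 N: true if N is 3, 5, 9, 17, ... (the component counts graid3 accepts)
is_pow2_plus1() {
    m=$(( $1 - 1 ))
    [ "$m" -ge 2 ] || return 1
    while [ $((m % 2)) -eq 0 ]; do
        m=$((m / 2))
    done
    [ "$m" -eq 1 ]
}

# geom_label_cmd CONFIG PROVIDER...: echo the matching gmirror/graid3 label command
geom_label_cmd() {
    config=$1
    shift
    case "$config" in
        "gmirror round-robin")
            echo "gmirror label -v -b round-robin gm0 $*" ;;
        "gmirror split 128K")
            # -s is the split slice size in bytes: 128K = 131072
            echo "gmirror label -v -b split -s 131072 gm0 $*" ;;
        "graid3"|"graid3 -r")
            if ! is_pow2_plus1 "$#"; then
                echo "graid3 needs 3, 5, 9, ... providers, got $#" >&2
                return 1
            fi
            # -r: also read from the parity component, round-robin
            if [ "$config" = "graid3 -r" ]; then
                echo "graid3 label -v -r gr0 $*"
            else
                echo "graid3 label -v gr0 $*"
            fi ;;
        *)
            echo "unknown configuration: $config" >&2
            return 1 ;;
    esac
}
```

Piping the output to sh would create the device. This needs root and the geom class loaded, for example with `gmirror load` or `graid3 load`, and it overwrites the last sector of every provider named.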

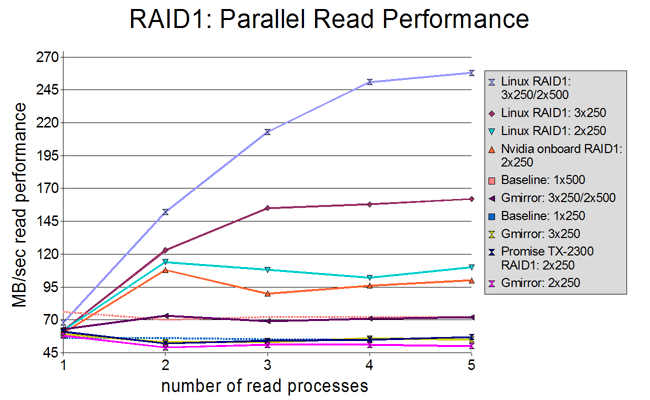

read performance (1 process)

5 250GB/500GB, graid3 : 213 MB/s (dips down to 160 MB/s)
5 750GB disks, graid3 : 152 MB/s (wildly fluctuating, 120-200 MB/s)
3 250GB disks, graid3 : 114 MB/s (dips down to 90 MB/s)
1 500GB disk : 76 MB/s
1 750GB disk : 65 MB/s (60-70 MB/s)
5 250GB/500GB, gmirror round-robin : 63 MB/s
3 250GB disks, gmirror round-robin : 59 MB/s
1 250GB disk : 56 MB/s (very little variation)
3 250GB disks, gmirror split 128K : 55 MB/s
5 250GB/500GB, gmirror split 128K : 54 MB/s

read performance (2 processes)

5 250GB/500GB, graid3 : 128 MB/s (peak: 155+ MB/s)
5 750GB disks, graid3 : 125 MB/s (peak: 140+ MB/s)
3 250GB disks, graid3 : 98 MB/s (peak: 130+ MB/s)
3 250GB disks, graid3 -r : 88 MB/s (peak: 120+ MB/s)
2 250GB disks, nVidia onboard RAID1 : 81 MB/s (peak: 120+ MB/s)
5 250GB/500GB, gmirror round-robin : 73 MB/s
2 250GB disks, Promise TX2300 RAID1 : 70 MB/s (peak: 100+ MB/s)
1 500GB disk : 70 MB/s
1 250GB disk : 56 MB/s (peak: 60+ MB/s)
2 250GB disks, gmirror round-robin : 55 MB/s (peak: 65+ MB/s)
3 250GB disks, gmirror round-robin : 53 MB/s
5 250GB/500GB, gmirror split 128K : 50 MB/s
3 250GB disks, gmirror split 128K : 46 MB/s

read performance (3 processes)

5 250GB/500GB, graid3 : 106 MB/s (peak: 130+ MB/s, low: 90+ MB/s)
5 250GB/500GB, graid3 -r : 103 MB/s (peak: 120+ MB/s, low: 80+ MB/s)
1 500GB disk : 72 MB/s
5 250GB/500GB, gmirror round-robin : 69 MB/s
1 250GB disk : 55 MB/s
3 250GB disks, gmirror round-robin : 53 MB/s
3 250GB disks, gmirror split 128K : 49 MB/s
5 250GB/500GB, gmirror split 128K : 47 MB/s

read performance (4 processes)

5 250GB/500GB, graid3 : 105 MB/s (peak: 130+ MB/s, low: 90+ MB/s)
5 250GB/500GB, graid3 -r : 105 MB/s (peak: 120+ MB/s, low: 80+ MB/s)
1 500GB disk : 72 MB/s
5 250GB/500GB, gmirror round-robin : 71 MB/s (peak: 75+ MB/s, low: 64+ MB/s)
3 250GB disks, gmirror round-robin : 65 MB/s
1 250GB disk : 55 MB/s
3 250GB disks, gmirror split 128K : 55 MB/s
5 250GB/500GB, gmirror split 128K : 47 MB/s (peak: 59+ MB/s, low: 31+ MB/s)

read performance (5 processes)

5 250GB/500GB, graid3 -r : 107 MB/s (peak: 120+ MB/s, low: 80+ MB/s)
5 250GB/500GB, graid3 : 105 MB/s (peak: 130+ MB/s, low: 90+ MB/s)
5 250GB/500GB, gmirror round-robin : 72 MB/s (peak: 80+ MB/s, low: 67+ MB/s)
1 500GB disk : 72 MB/s
1 250GB disk : 56 MB/s
5 250GB/500GB, gmirror split 128K : 47 MB/s (peak: 60+ MB/s, low: 35+ MB/s)
3 250GB disks, gmirror round-robin : (no result recorded)
3 250GB disks, gmirror split 128K : (no result recorded)

read performance with vfs.read_max=8 (2 parallel cp processes)

3 250GB disks, gmirror round-robin : 31 MB/s
1 250GB disk : 27 MB/s
2 250GB disks, gmirror round-robin : 23 MB/s
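The lists above are easier to compare as ratios against a single disk. For example, 5-disk graid3 at 213 MB/s is about 2.8 times the single 500GB disk's 76 MB/s in the 1-process test. The awk sketch below (relative_to is a hypothetical name) reads lines in this page's "configuration : N MB/s" format and prints each rate as a multiple of a chosen baseline line. It skips entries that have no number, such as the two unrecorded 5-process results.

```shell
#!/bin/sh
# relative_to BASELINE: read "configuration : N MB/s ..." lines on stdin and
# print each configuration's rate as a multiple of the BASELINE line's rate.
# Lines without a number after the colon are skipped.
relative_to() {
    awk -F' : ' -v base="$1" '
        { split($2, v, " ") }
        v[1] !~ /^[0-9]/ { next }
        {
            n++
            label[n] = $1
            rate[n] = v[1]
            if ($1 == base) b = v[1]
        }
        END {
            if (b == "") { print "baseline not found: " base > "/dev/stderr"; exit 1 }
            for (i = 1; i <= n; i++) printf "%-40s %.2fx\n", label[i], rate[i] / b
        }'
}
```

For instance, pasting the 1-process read list into `relative_to '1 500GB disk'` gives 2.80x for 5-disk graid3 and 0.74x for the single 250GB disk.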

Preliminary conclusions:

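The system default of vfs.read_max=8 is insufficient for ANY configuration, including a vanilla single drive (1 250GB disk, 2 processes: 27 MB/s at vfs.read_max=8 vs 56 MB/s at vfs.read_max=128).

gmirror read performance sucks: no gmirror configuration tested got above 73 MB/s, roughly what a single 500GB disk manages on its own.

Promise read performance sucks (TX2300 RAID1 on 2 250GB disks: 70 MB/s with 2 processes, below the nVidia onboard RAID1's 81 MB/s).

nVidia read performance sucks for a single process.

graid3 is the clear performance king here, and it offers a very significant write performance increase as well (153 MB/s vs 72 MB/s for a single 500GB drive).

SATA-II seems to offer significant performance increases over SATA-I on large arrays (5-disk graid3, 1 process: 213 MB/s on the 250GB/500GB drives vs 152 MB/s on the SATA-150 750GB drives, although the two arrays were also on different machines).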
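The first conclusion suggests raising vfs.read_max permanently. On FreeBSD, `sysctl vfs.read_max=128` changes it only until the next reboot, and a `vfs.read_max=128` line in /etc/sysctl.conf reapplies it at boot. The following is a minimal sketch of a hypothetical helper, set_sysctl_conf, that puts such a line into a sysctl.conf-style file without duplicating it. The value 128 is simply the one used for these tests, not a tuned recommendation.

```shell
#!/bin/sh
# set_sysctl_conf FILE NAME VALUE: replace any NAME=... lines in FILE with
# NAME=VALUE, or append NAME=VALUE if there is none.
set_sysctl_conf() {
    file=$1
    name=$2
    value=$3
    # escape the dots in names like vfs.read_max so grep/sed match them literally
    pat=$(printf '%s' "$name" | sed 's/\./\\./g')
    touch "$file"
    if grep -q "^$pat=" "$file"; then
        sed "s|^$pat=.*|$name=$value|" "$file" > "$file.tmp.$$" &&
            mv "$file.tmp.$$" "$file"
    else
        echo "$name=$value" >> "$file"
    fi
}

# Usage on FreeBSD, as root (not run here):
#   sysctl vfs.read_max=128                              # effective immediately
#   set_sysctl_conf /etc/sysctl.conf vfs.read_max 128    # reapplied at every boot
```

Writing to a temporary file and moving it into place avoids relying on `sed -i`, whose syntax differs between FreeBSD and GNU sed.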